Restful Booker API Testing with Postman

Where to start?

The API layer is one of the most crucial components of any application. It is the channel that connects client to server (or one microservice to another), drives business processes, and provides the services that give value to users.

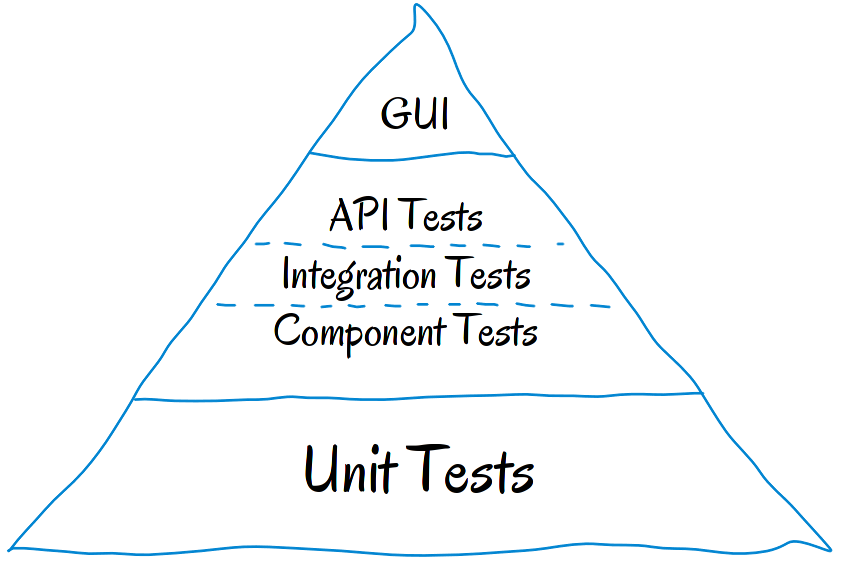

Mike Cohn’s famous Test Pyramid places API tests at the service level (integration), which suggests that around 20% or more of all of our tests should focus on APIs (the exact percentage is less important and varies based on our needs).

API tests are fast, give high ROI, and simplify the validation of business logic, security, compliance, and other aspects of the application. When the API is public and gives end users programmatic access to our application or services, API tests effectively become end-to-end tests and should cover complete user stories.

The importance of API testing is obvious. Many methods and resources help with how to test APIs: manual testing, automated testing, test environments, tools, libraries, and frameworks. However, regardless of what you use (Postman, supertest, pytest, JMeter, mocha, Jasmine, RestAssured, or any other tool of the trade), before settling on a test method you need to determine what to test.

Main aspects of testing our API

Whether you’re thinking of test automation or manual testing, our functional test cases have the same test actions, are part of wider test scenario categories, and belong to three kinds of test flows.

API test actions

Each test consists of test actions: the individual steps a test takes within an API test flow. For each API request, the test needs to take the following actions:

- Verify correct HTTP status code. For example, creating a resource should return 201 CREATED, and unpermitted requests should return 403 FORBIDDEN.

- Verify response payload. Check that the body is valid JSON with correct field names, types, and values, including in error responses.

- Verify response headers. HTTP server headers affect both security and performance.

- Verify correct application state. This is optional and applies mainly to manual testing, or when a UI or another interface can be easily inspected.

- Verify basic performance sanity. If an operation was completed successfully but took an unreasonable amount of time, the test fails.
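These actions translate directly into assertions. As a minimal, tool-agnostic sketch (in Python rather than Postman's JavaScript sandbox), the hypothetical `check_response` helper below takes an already-received response's status, headers, body, and elapsed time and returns a list of failures. The JSON `Content-Type` expectation and the 1,000 ms default limit are illustrative assumptions, and the application-state check is left out because it depends entirely on the system under test.

```python
import json


def check_response(status, headers, body, elapsed_ms,
                   expected_status, expected_fields, max_ms=1000):
    """Check one received response; return failure messages (empty list = pass)."""
    failures = []

    # Status code
    if status != expected_status:
        failures.append(f"status {status}, expected {expected_status}")

    # Headers: HTTP header names are case-insensitive, so compare in lower case
    lowered = {name.lower(): value for name, value in headers.items()}
    if not lowered.get("content-type", "").startswith("application/json"):
        failures.append("Content-Type is not application/json")

    # Payload: valid JSON object with the expected field names and types
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        failures.append("body is not valid JSON")
        payload = {}
    if not isinstance(payload, dict):
        failures.append("body is not a JSON object")
        payload = {}
    for field, expected_type in expected_fields.items():
        if field not in payload:
            failures.append(f"missing field {field!r}")
        elif not isinstance(payload[field], expected_type):
            failures.append(f"field {field!r} is {type(payload[field]).__name__}")

    # Basic performance sanity: a correct but very slow response still fails
    if elapsed_ms > max_ms:
        failures.append(f"took {elapsed_ms} ms, limit {max_ms} ms")

    return failures


print(check_response(
    status=200,
    headers={"Content-Type": "application/json; charset=utf-8"},
    body='{"bookingid": 1, "booking": {"firstname": "Jim"}}',
    elapsed_ms=120,
    expected_status=200,
    expected_fields={"bookingid": int, "booking": dict},
))  # []
```

Returning every failure instead of stopping at the first one makes a single run report all the broken aspects of a response at once.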

Test scenario categories

Our test cases fall into the following general test scenario groups:

- Basic positive tests (happy paths)

- Extended positive testing with optional parameters

- Negative testing with valid input

- Negative testing with invalid input

- Destructive testing

- Security, authorization, and permission tests (which are out of the scope of this post)

Happy path tests check basic functionality and the acceptance criteria of the API. We later extend positive tests to include optional parameters and extra functionality. The next group of tests is negative testing where we expect the application to gracefully handle problem scenarios with both valid user input (for example, trying to add an existing username) and invalid user input (trying to add a username which is null). Destructive testing is a deeper form of negative testing where we intentionally attempt to break the API to check its robustness (for example, sending a huge payload body in an attempt to overflow the system).
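The categories are easiest to keep apart as a table of cases. The sketch below runs one case per category (security is skipped, as in the list) against `FakeUserService`, an invented in-memory stand-in for an "add user" endpoint. Its size limit and the 400/409/413 status codes it returns are illustrative assumptions, not the behaviour of any particular API.

```python
import json

MAX_BODY_BYTES = 10_000  # hypothetical request-size limit for the fake service


class FakeUserService:
    """Invented in-memory stand-in for an 'add user' endpoint (illustration only)."""

    def __init__(self):
        self.usernames = set()

    def add_user(self, payload):
        # Destructive input: an oversized body should be rejected, not crash the service
        if len(json.dumps(payload).encode("utf-8")) > MAX_BODY_BYTES:
            return 413
        username = payload.get("username")
        # Invalid input: a null, non-string, or empty username
        if not isinstance(username, str) or not username:
            return 400
        # Valid input that the business rules still reject: duplicate username
        if username in self.usernames:
            return 409
        self.usernames.add(username)
        return 201


# (category, payload, expected status); cases run in order on one service instance,
# so the duplicate-username case relies on the basic positive case having run first.
CASES = [
    ("basic positive", {"username": "alice"}, 201),
    ("extended positive (optional field)", {"username": "bob", "email": "bob@example.com"}, 201),
    ("negative, valid input (duplicate)", {"username": "alice"}, 409),
    ("negative, invalid input (null)", {"username": None}, 400),
    ("destructive (huge payload)", {"username": "x" * 1_000_000}, 413),
]


def run_cases(service, cases):
    """Return (category, passed) pairs."""
    return [(name, service.add_user(payload) == expected) for name, payload, expected in cases]


for name, passed in run_cases(FakeUserService(), CASES):
    print(("PASS" if passed else "FAIL") + ": " + name)
```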

Test flows

Let’s distinguish between three kinds of test flows which comprise our test plan:

- Testing requests in isolation – Executing a single API request and checking the response accordingly. Such basic tests are the minimal building blocks we should start with, and there’s no reason to continue testing if these tests fail.

- Multi-step workflow with several requests – Testing a series of requests that represent common user actions, since some requests rely on others. For example, we execute a POST request that creates a resource and returns an auto-generated identifier in its response. We then use this identifier to check whether this resource is present in the list of elements received by a GET request. Then we use a PATCH endpoint to update the resource with new data, and we invoke a GET request again to validate the new data. Finally, we DELETE that resource and use GET again to verify it no longer exists.

- Combined API and web UI tests – This is mostly relevant to manual testing, where we want to ensure data integrity and consistency between the UI and API.

We execute requests via the API and verify the actions through the web app UI, and vice versa. The purpose of these integrity test flows is to ensure that, although the resources are affected through different mechanisms, the system still maintains the expected integrity and a consistent flow.
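The POST, GET, PATCH, GET, DELETE, GET chain from the multi-step workflow can be sketched as a single test function in which each step feeds the next. To keep it runnable offline, it drives an invented in-memory `FakeResourceApi` whose methods return `(status, body)` pairs. The status codes it uses (201, 200, 204, 404) are assumptions about a typical REST API; a real API's documentation (Restful-booker's included) may list different codes for some of these calls.

```python
import itertools


class FakeResourceApi:
    """Invented in-memory API: methods return (status, body) like an HTTP client would."""

    def __init__(self):
        self._items = {}
        self._ids = itertools.count(1)  # auto-generated identifiers

    def post(self, data):
        new_id = next(self._ids)
        self._items[new_id] = dict(data)
        return 201, {"id": new_id, **data}

    def get_all(self):
        return 200, [{"id": i, **d} for i, d in self._items.items()]

    def get(self, item_id):
        if item_id not in self._items:
            return 404, None
        return 200, {"id": item_id, **self._items[item_id]}

    def patch(self, item_id, changes):
        if item_id not in self._items:
            return 404, None
        self._items[item_id].update(changes)
        return 200, {"id": item_id, **self._items[item_id]}

    def delete(self, item_id):
        if self._items.pop(item_id, None) is None:
            return 404, None
        return 204, None


def run_crud_workflow(api):
    # Step 1: POST creates the resource and returns an auto-generated id
    status, created = api.post({"name": "Jim", "nights": 2})
    assert status == 201
    item_id = created["id"]
    # Step 2: the new id must appear in the list returned by GET
    status, items = api.get_all()
    assert status == 200 and any(item["id"] == item_id for item in items)
    # Step 3: PATCH updates part of the resource...
    status, _ = api.patch(item_id, {"nights": 3})
    assert status == 200
    # Step 4: ...and a follow-up GET confirms the new data
    status, fetched = api.get(item_id)
    assert status == 200 and fetched["nights"] == 3
    # Step 5: DELETE removes it, and GET must no longer find it
    status, _ = api.delete(item_id)
    assert status == 204
    status, _ = api.get(item_id)
    assert status == 404
    return item_id


print(run_crud_workflow(FakeResourceApi()))  # 1
```

In Postman the same chaining is usually done by saving the returned identifier to a variable in one request's test script and reading it in the next request.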

My Restful Booker API test examples

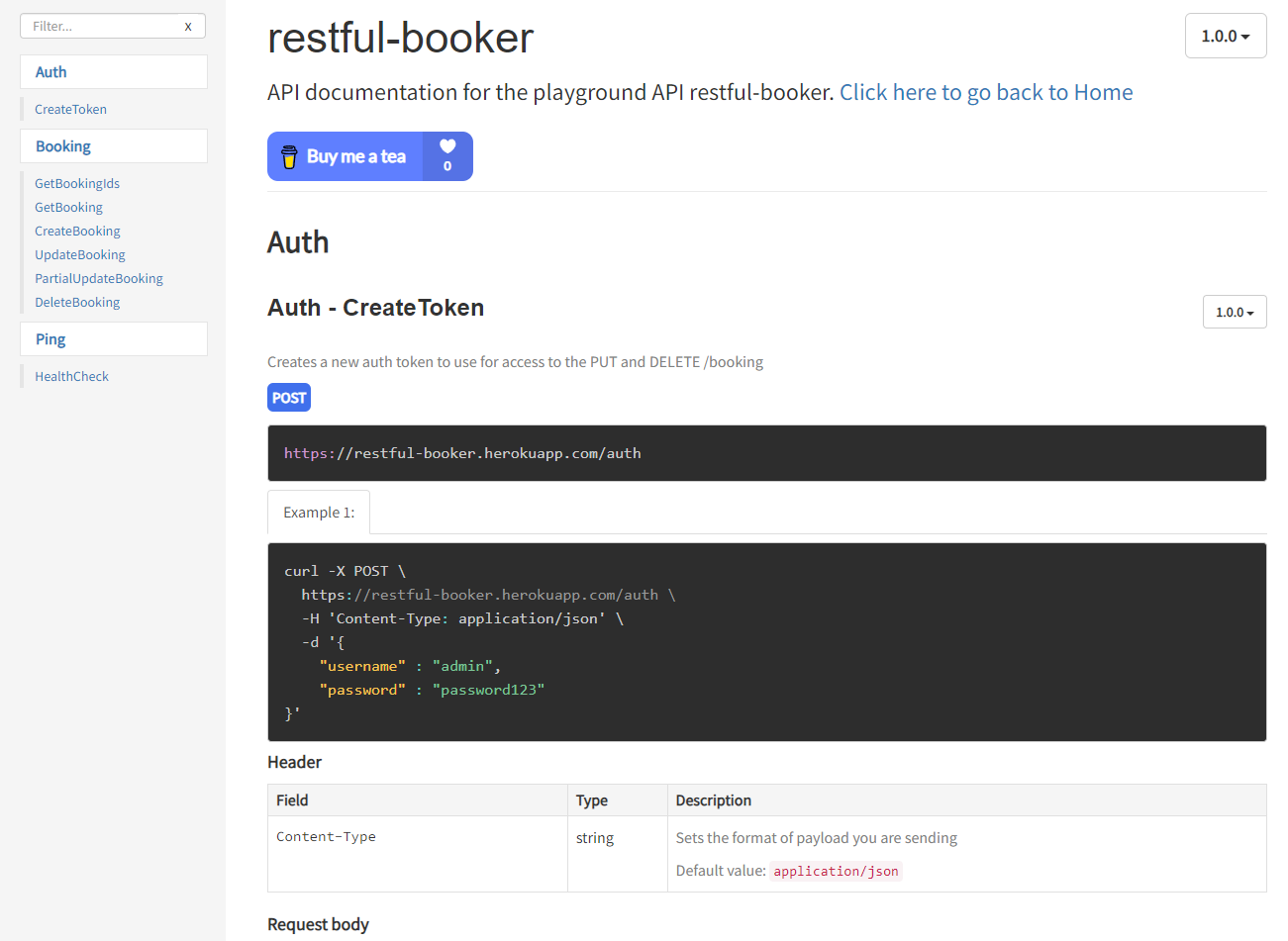

Before we start writing our tests, we should check the API documentation to see which operations and requests are available. In my case, I tested the Restful-booker API, but you can find many other public APIs on this GitHub page.
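One way to turn the documentation into a checklist is to build each request in code before sending anything. The sketch below uses Python's standard `urllib.request` to construct (not send) the main Restful-booker calls. The base URL, endpoints, `admin`/`password123` credentials, example booking, and `Cookie: token=<token>` header are taken from the public Restful-booker documentation and should be verified there; `build_request` is a hypothetical helper, and the booking ID `1` and token `abc123` are placeholders.

```python
import json
import urllib.request

BASE_URL = "https://restful-booker.herokuapp.com"


def build_request(method, path, body=None, token=None):
    """Hypothetical helper: build (but do not send) a Restful-booker request."""
    headers = {"Accept": "application/json"}
    data = None
    if body is not None:
        data = json.dumps(body).encode("utf-8")
        headers["Content-Type"] = "application/json"
    if token is not None:
        # Update/delete calls need the token from POST /auth, passed as a cookie
        headers["Cookie"] = f"token={token}"
    return urllib.request.Request(BASE_URL + path, data=data, headers=headers, method=method)


booking = {
    "firstname": "Jim",
    "lastname": "Brown",
    "totalprice": 111,
    "depositpaid": True,
    "bookingdates": {"checkin": "2018-01-01", "checkout": "2019-01-01"},
    "additionalneeds": "Breakfast",
}

requests_to_test = [
    build_request("POST", "/auth", {"username": "admin", "password": "password123"}),
    build_request("GET", "/booking"),
    build_request("POST", "/booking", booking),
    build_request("PUT", "/booking/1", booking, token="abc123"),
    build_request("PATCH", "/booking/1", {"firstname": "James"}, token="abc123"),
    build_request("DELETE", "/booking/1", token="abc123"),
]

for req in requests_to_test:
    print(req.get_method(), req.full_url)
```

Each request can then be sent with `urllib.request.urlopen(req)`. Note that `urlopen` raises `urllib.error.HTTPError` for 4xx and 5xx responses, so negative tests need to catch it and inspect its `code` attribute.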

We can then derive test scenarios from positive, negative, and even destructive inputs.

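Positive tests include all the happy-path checks: creating and deleting a booking by its booking ID and making sure that the POST, DELETE, PATCH, PUT, and GET methods work as expected. We should also check idempotency: repeating a GET, PUT, or DELETE request should leave the server in the same state as sending it once, whereas each repeated POST is expected to create another booking.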
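Idempotency is about server state rather than the response: a second DELETE of the same booking may well return an error status, yet the stored data is unchanged. A minimal sketch of the check, assuming an invented in-memory `FakeBookingStore` instead of the real API, sends a request once to one fresh store and twice to another, then compares the stored bookings. Against Restful-booker itself, the snapshots would come from GET requests instead.

```python
import copy


class FakeBookingStore:
    """Invented in-memory stand-in for the booking resource (illustration only)."""

    def __init__(self):
        self.bookings = {}
        self.next_id = 1

    def post(self, booking):
        self.bookings[self.next_id] = dict(booking)
        self.next_id += 1

    def get(self, booking_id):
        return self.bookings.get(booking_id)

    def put(self, booking_id, booking):
        self.bookings[booking_id] = dict(booking)

    def delete(self, booking_id):
        self.bookings.pop(booking_id, None)

    def snapshot(self):
        return copy.deepcopy(self.bookings)


def seeded_store():
    """Fresh store containing one booking with ID 1."""
    store = FakeBookingStore()
    store.post({"firstname": "Jim", "lastname": "Brown"})
    return store


def is_idempotent(make_store, request):
    """Compare stored state after sending a request once vs. twice."""
    once, twice = make_store(), make_store()
    request(once)
    request(twice)
    request(twice)
    return once.snapshot() == twice.snapshot()


updated = {"firstname": "James", "lastname": "Brown"}
print(is_idempotent(seeded_store, lambda s: s.get(1)))         # True
print(is_idempotent(seeded_store, lambda s: s.put(1, updated)))  # True
print(is_idempotent(seeded_store, lambda s: s.delete(1)))      # True
print(is_idempotent(seeded_store, lambda s: s.post(updated)))  # False
```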

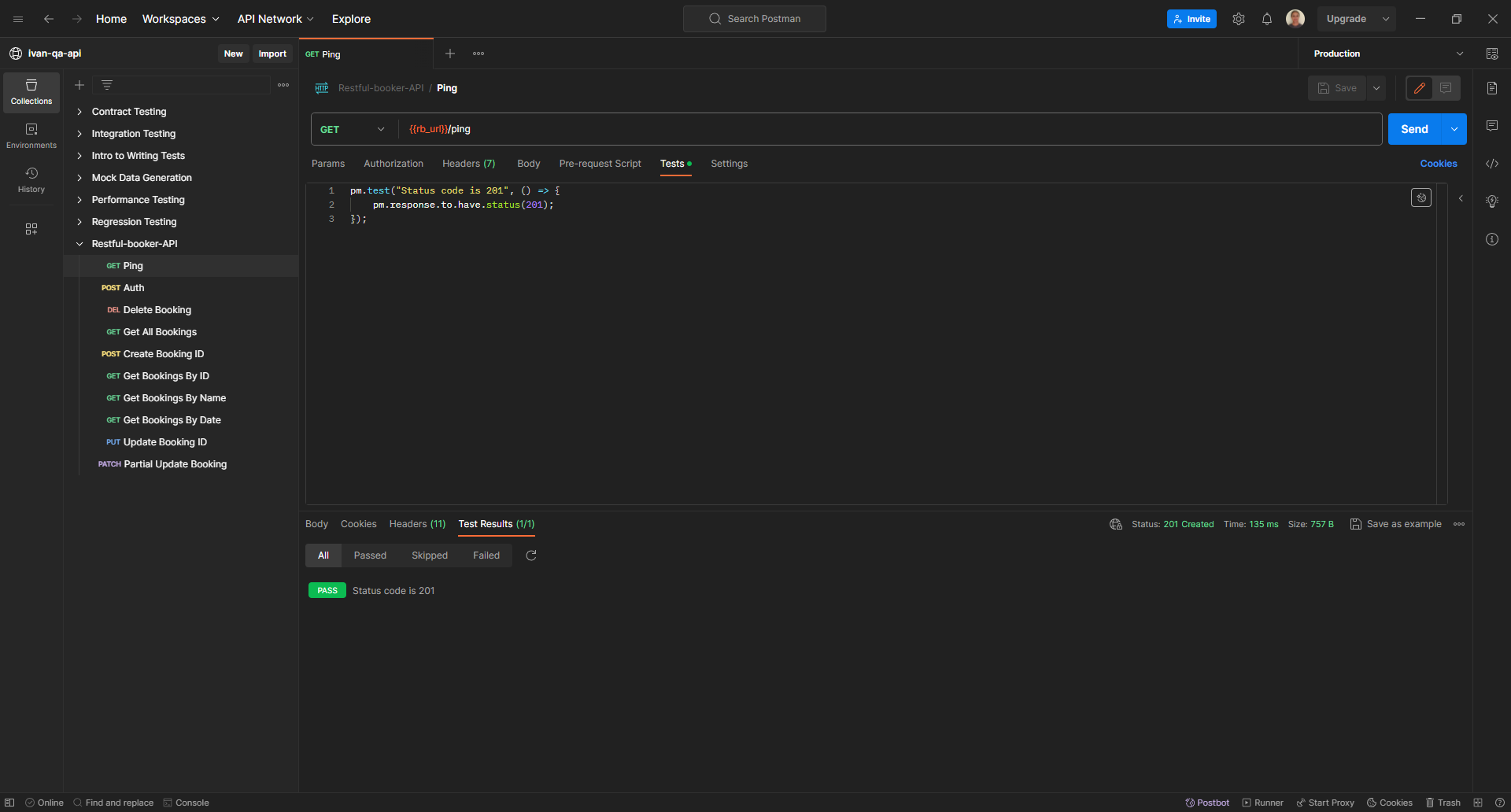

You can find my API test examples in this Postman workspace.