Test Strategy

Test Strategy Definition

The ISTQB glossary defines a test strategy as "a high-level description of the test levels to be performed and the testing within those levels for an organization or programme (one or more projects)".

Writing a test strategy is less about ticking boxes to show that every type of testing has been included and more about thinking through the risk factors in the project and planning how to mitigate them.

Types of test strategies

- Analytical strategy

- Model-based strategy

- Methodical strategy

- Standards- or process-compliant strategy

- Reactive strategy

- Consultative strategy

- Regression-averse strategy

1. Analytical strategy

An analytical test strategy is the most common type. It might be based on requirements (in user acceptance testing, for example), specifications (in system or component testing), or risks (in a proper risk-based testing strategy).

In a more agile approach, it might be based on none of those, but on a shared understanding of user stories, or even a mind map. The point is not the form of the test basis, but that there is a specific test basis for the release that is analyzed to derive a set of tests.

For example, in requirements-based testing, the requirements are analyzed to derive test conditions. Tests are then designed, implemented and executed to verify those requirements, and the results are also recorded against the requirements: requirements tested and passed, requirements tested but failed, and requirements not yet fully tested.
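Recording results against requirements can be sketched as follows. This assumes a hypothetical mapping from each requirement ID to the IDs of the tests derived from it, plus a record of which executed tests passed; the IDs are illustrative. A requirement counts as passed only when every test derived from it has run and passed.

```python
def requirement_status(planned: dict[str, set[str]], results: dict[str, bool]) -> dict[str, str]:
    """planned: requirement ID -> IDs of the tests derived from it (hypothetical).
    results: executed test ID -> True if it passed, False if it failed."""
    status = {}
    for req, test_ids in planned.items():
        executed = [results[t] for t in test_ids if t in results]
        if any(not passed for passed in executed):
            status[req] = "tested, failed"
        elif test_ids and len(executed) == len(test_ids):
            status[req] = "tested, passed"
        else:
            # Some derived tests have not run yet (or none were derived).
            status[req] = "not fully tested"
    return status


planned = {"REQ-1": {"T1", "T2"}, "REQ-2": {"T3"}, "REQ-3": {"T4", "T5"}}
results = {"T1": True, "T2": True, "T3": False, "T4": True}
print(requirement_status(planned, results))
```

Here REQ-1 is reported as passed, REQ-2 as failed, and REQ-3 as not fully tested because T5 has not been executed.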

2. Model-based strategy

An increasingly popular strategy is a model-based test strategy. This combines specification-based testing with structure-based testing to create a model of how the software should work. The model might be a diagram of the route a user takes through a website, a diagram of how different technical components integrate, or both!

This strategy is especially interesting because it allows for the best tool support: once the application is captured in a machine-readable model, tools can generate manual or automated tests from it.
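As a minimal sketch of generating tests from a machine-readable model, assume a hypothetical state model of a user's route through a website. The generator below aims for transition coverage: for every transition it finds the shortest route to the transition's source state with a breadth-first search, fires the transition, and records the expected end state. A tool would then turn these abstract tests into manual steps or automated scripts.

```python
from collections import deque

# Hypothetical model of a user's route through a website:
# state -> {action: next_state}
MODEL = {
    "Home": {"open_login": "Login", "browse": "Catalog"},
    "Login": {"submit_valid": "Account", "submit_invalid": "Login"},
    "Catalog": {"add_to_cart": "Cart"},
    "Cart": {"checkout": "Login"},
    "Account": {"logout": "Home"},
}


def shortest_route(model, start, target):
    """Breadth-first search for the shortest list of actions from start to target."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, actions = queue.popleft()
        if state == target:
            return actions
        for action, nxt in model.get(state, {}).items():
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, actions + [action]))
    return None  # target cannot be reached


def generate_transition_tests(model, start):
    """One abstract test per transition: reach its source state, then fire it."""
    tests = []
    for state, transitions in model.items():
        prefix = shortest_route(model, start, state)
        if prefix is None:
            continue  # unreachable state, nothing to test from the start page
        for action, expected in transitions.items():
            tests.append({"steps": prefix + [action], "expected_state": expected})
    return tests


for test in generate_transition_tests(MODEL, "Home"):
    print(" -> ".join(test["steps"]), "| expect:", test["expected_state"])
```

Transition coverage is only one possible criterion; a tool could just as well generate tests for every path up to a given length from the same model.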

Models can also be developed from the existing environment, such as the software, hardware, data speeds and infrastructure the system runs on.

3. Methodical strategy

A methodical test strategy applies a predefined, standard test basis, such as a checklist or a set of mandatory tests, to different applications.

This might be, for instance, because you are testing payments and the payment scheme provides a set of mandatory tests for a particular type of transaction in system testing. Or it might be because you are doing application security testing and want to leverage the industry experience baked into the OWASP Application Security Testing framework. In maintenance testing, a checklist describing the important functions and their attributes can be sufficient to perform the tests.
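At its core, a methodical strategy reuses one predefined checklist across applications. The sketch below assumes a hypothetical three-item checklist evaluated against a simplified configuration dictionary; the items and configuration keys are illustrative and are not taken from the OWASP material or from any payment scheme.

```python
from typing import Callable

# Hypothetical standard checklist: (item name, check over an app's configuration).
CHECKLIST: list[tuple[str, Callable[[dict], bool]]] = [
    ("HTTPS is enforced", lambda app: app.get("force_https", False)),
    ("Session cookie has Secure flag", lambda app: app.get("cookie_secure", False)),
    ("Passwords need at least 8 characters", lambda app: app.get("min_password_length", 0) >= 8),
]


def run_checklist(app: dict) -> dict[str, bool]:
    """Apply the same standard checklist to any application."""
    return {name: check(app) for name, check in CHECKLIST}


payments_app = {"force_https": True, "cookie_secure": True, "min_password_length": 12}
intranet_app = {"force_https": True, "cookie_secure": False, "min_password_length": 6}

for name, app in [("payments", payments_app), ("intranet", intranet_app)]:
    failed = [item for item, ok in run_checklist(app).items() if not ok]
    print(name, "failed:", failed or "none")
```

The value of the approach is that the checklist is written once and every application is held to the same basis.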

4. Standards- or process-compliant strategy

A standards-compliant test strategy uses industry standards to decide what to test.

This is most likely when you work in a regulated sector, such as self-driving cars or aviation, where there are specific standards you have to meet for testing. For example, a certain level of code coverage might be required in system testing, or specific scenarios might have to be tested in user acceptance testing.
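When a standard mandates a coverage level, the check can be automated as a gate. This is a minimal sketch assuming statement coverage and a hypothetical 100% threshold; real standards may demand stronger criteria (DO-178C, for example, requires MC/DC coverage for the most critical airborne software), and real coverage data would come from a coverage tool rather than hand-written line sets.

```python
def statement_coverage(executable_lines: set[int], executed_lines: set[int]) -> float:
    """Percentage of executable statements exercised by the tests."""
    if not executable_lines:
        return 100.0
    covered = executable_lines & executed_lines
    return 100.0 * len(covered) / len(executable_lines)


def meets_standard(coverage: float, required: float) -> bool:
    """Compliance gate: pass only if coverage reaches the required threshold."""
    return coverage >= required


# Hypothetical data: lines 1-20 are executable and the test run hit lines 1-18.
executable = set(range(1, 21))
executed = set(range(1, 19))
cov = statement_coverage(executable, executed)
print(f"coverage {cov:.1f}% ->", "compliant" if meets_standard(cov, required=100.0) else "not compliant")
```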

A process-compliant strategy does the same with a development process. In a project following Scrum, for example, testers build the complete test strategy around each user story: identifying test criteria, defining test cases, executing tests and reporting status.

5. Reactive strategy

In a reactive test strategy, you decide what to test when you receive the software. This happens in exploratory testing, where what you observe during testing drives further tests, so testing is based on the defects found in the actual system.

Another example is metamorphic testing of machine learning systems, which you could argue is reactive because follow-up tests are derived from earlier source tests.
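Here is a minimal sketch of metamorphic testing, assuming a toy 1-nearest-neighbour classifier written for illustration (not a real ML library). The source tests are ordinary predictions. Two follow-up tests are derived from them using metamorphic relations that should hold for this classifier: shuffling the training data, and shifting every feature by the same constant. Neither relation needs a known correct label, which is what makes the technique useful when no test oracle exists.

```python
import random


def predict_1nn(train, point):
    """Toy 1-nearest-neighbour classifier using squared Euclidean distance."""
    def dist(example):
        features, _ = example
        return sum((a - b) ** 2 for a, b in zip(features, point))
    return min(train, key=dist)[1]


train = [((1, 1), "A"), ((2, 1), "A"), ((8, 9), "B"), ((9, 8), "B")]
queries = [(0, 0), (3, 2), (7, 7), (10, 10)]

# Source tests: ordinary predictions.
source = [predict_1nn(train, q) for q in queries]

# Follow-up test 1, MR: permuting the training data must not change predictions.
shuffled = train[:]
random.Random(0).shuffle(shuffled)
follow_up_1 = [predict_1nn(shuffled, q) for q in queries]

# Follow-up test 2, MR: shifting every feature (training data and queries)
# by the same constant leaves all distances, and so all predictions, unchanged.
shift = 5
shifted_train = [(tuple(x + shift for x in f), label) for f, label in train]
follow_up_2 = [predict_1nn(shifted_train, tuple(x + shift for x in q)) for q in queries]

assert follow_up_1 == source, "permutation relation violated"
assert follow_up_2 == source, "translation relation violated"
print("source predictions:", source)  # source predictions: ['A', 'A', 'B', 'B']
```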

6. Consultative strategy

As the name suggests, a consultative strategy uses input from key stakeholders to decide the scope of test conditions, as in user-directed testing.

Sure, there have been scenarios where the functionality was too complex for me to understand and I had to ask other people how to test it, but I still applied test design techniques to what they told me in my system testing.

Testers may then apply techniques such as pairwise testing or equivalence partitioning, depending on the priority of the items in the lists the stakeholders provide.
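Pairwise testing can be sketched with a simple greedy algorithm: keep choosing the full combination of values that covers the most not-yet-covered value pairs until every pair appears in at least one test. The parameters below are hypothetical. Dedicated pairwise tools use smarter algorithms; this version enumerates every combination, so it only suits small inputs.

```python
from itertools import combinations, product


def all_pairs(parameters: dict[str, list]) -> set:
    """Every pair of values from two different parameters that must appear together."""
    names = list(parameters)
    return {
        ((a, va), (b, vb))
        for a, b in combinations(names, 2)
        for va in parameters[a]
        for vb in parameters[b]
    }


def pairs_in(test: dict, names: list[str]) -> set:
    """The value pairs a single test case covers."""
    return {((a, test[a]), (b, test[b])) for a, b in combinations(names, 2)}


def pairwise_tests(parameters: dict[str, list]) -> list[dict]:
    """Greedy all-pairs selection over the full set of combinations."""
    names = list(parameters)
    candidates = [dict(zip(names, values)) for values in product(*parameters.values())]
    uncovered = all_pairs(parameters)
    tests = []
    while uncovered:
        best = max(candidates, key=lambda t: len(pairs_in(t, names) & uncovered))
        tests.append(best)
        uncovered -= pairs_in(best, names)
    return tests


PARAMETERS = {
    "browser": ["Chrome", "Firefox", "Safari"],
    "account_type": ["personal", "business"],
    "payment": ["card", "transfer", "wallet"],
}
suite = pairwise_tests(PARAMETERS)
print(len(suite), "tests instead of", 3 * 2 * 3)
for test in suite:
    print(test)
```

Every pair of values still appears in at least one test, but the suite is noticeably smaller than the 18 exhaustive combinations.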

7. Regression-averse strategy

A regression-averse strategy focuses on making sure nothing has broken since the last release, rather than on testing the changes themselves. The aim is to reduce regression risks for functional or non-functional parts of the product. A typical example is an infrastructure replacement, where no behavior is expected to change.
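A regression-averse check can be as simple as running the same inputs through the previous and the new release and flagging any difference. The sketch below uses a hypothetical fee calculation that was reimplemented during an infrastructure move; the new version rounds where the old one truncated, which is exactly the kind of unintended change this strategy is meant to catch.

```python
def compare_releases(old_impl, new_impl, inputs):
    """Run the same inputs through both releases and report every changed output."""
    regressions = []
    for value in inputs:
        expected = old_impl(value)
        actual = new_impl(value)
        if actual != expected:
            regressions.append((value, expected, actual))
    return regressions


# Hypothetical fee calculation, amounts in cents: 1% fee, minimum 50 cents.
def fee_old(amount_cents: int) -> int:
    return max(50, amount_cents // 100)  # truncates


def fee_new(amount_cents: int) -> int:
    return max(50, round(amount_cents * 0.01))  # reimplemented: rounds instead


regressions = compare_releases(fee_old, fee_new, [1_000, 9_999, 123_456, 500_000])
for value, expected, actual in regressions:
    print(f"input {value}: expected {expected}, got {actual}")
```

With these inputs, the check flags 9,999 and 123,456, the two amounts where rounding and truncation disagree.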

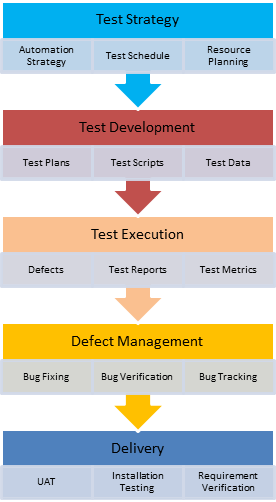

Test Strategy in SDLC


What should be included?

The key components of a good test strategy document are as follows:

- Test objectives and their scope

- Key business-led quality requirements

- Possible risk factors

- Test deliverables

- Testing tools

- Responsibilities

- Tracking and reporting of issues

- Configuration and change management

- Test environment requirements

Sample Test Strategy Document

1. Scope

The testing will be performed on a web application that includes the following functionality:

- User registration and account authentication

- Account transactions and transfers

- Balance inquiries

- Bill payments

- Loan applications

2. Test Approach

The testing approach for the web application will include the following steps:

Test planning: The testing team will review the requirements and develop a test plan that outlines the testing scope, objectives, and timelines for each functionality.

Test design: The testing team will develop test cases and test scenarios based on the requirements and user stories. Test data will be identified, and test environments will be set up.

Test execution: The test cases will be executed using manual and automated testing techniques. The testing team will report and track defects, and retest fixed defects.

Test reporting: The testing team will prepare and share test reports that summarize the testing progress, the number of defects, and the overall quality of the application.

3. Testing Types

The following testing types will be performed during the testing of the web application:

Functional testing: This type of testing ensures that the application functions correctly according to the requirements. It includes the testing of user registration, account transactions, balance inquiries, bill payments, and loan applications.

Usability testing: This type of testing focuses on user experience, ease of use, and the design of the user interface for the application's key operations.

Performance testing: This type of testing evaluates the system's responsiveness, stability, and scalability under different load conditions, for example different transaction volumes in a banking application.

Security testing: This type of testing ensures that the application is secure from unauthorized access, data breaches, and other security threats.

Compatibility testing: This type of testing checks if the application functions correctly across different browsers, devices, and operating systems.
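To make the performance testing idea concrete, here is a minimal load-test sketch. The `transaction` function is a hypothetical stand-in that sleeps instead of calling the real system, and the load levels are arbitrary; in practice one of the performance testing tools listed below would drive the real application. The sketch fires transactions from several threads and reports median and 95th-percentile latency at each load level.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def transaction() -> float:
    """Hypothetical stand-in for one banking transaction; returns latency in seconds.
    A real test would call the system under test instead of sleeping."""
    start = time.perf_counter()
    time.sleep(0.01)  # simulated work
    return time.perf_counter() - start


def run_load(concurrent_users: int, requests_per_user: int) -> dict:
    """Run transactions from several threads and summarise their latencies."""
    total = concurrent_users * requests_per_user
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        latencies = list(pool.map(lambda _: transaction(), range(total)))
    return {
        "users": concurrent_users,
        "requests": total,
        "median_ms": statistics.median(latencies) * 1000,
        # 19 cut points for n=20; the last one is the 95th percentile.
        "p95_ms": statistics.quantiles(latencies, n=20, method="inclusive")[-1] * 1000,
    }


for users in (1, 5, 20):  # different load conditions
    print(run_load(users, requests_per_user=10))
```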

4. Testing Tools

Most testing projects rely on tools from several of the following categories:

- Test management tools

- Test automation tools

- Performance testing tools

- Security testing tools

- Code quality and static analysis tools

- Test data management tools

- Continuous integration and continuous delivery (CI/CD) tools

- Defect tracking tools

- Test environment management tools

- Usability testing tools

- Visual testing tools

5. Hardware - Software Configuration

The testing will be conducted on the web application across the following hardware and software configurations (based on the user and traffic analytics provided by the web development team or marketing team):

Operating systems: Windows, macOS, Linux

Browsers: Chrome, Firefox, Safari

Database systems: MySQL, Oracle, SQL Server
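The configurations above can be expanded into an explicit test matrix. This sketch assumes that every client combination is tested against every back-end database, and it drops Safari outside macOS because current Safari releases only run on Apple platforms. Any further exclusions would come from the analytics mentioned above.

```python
from itertools import product

OPERATING_SYSTEMS = ["Windows", "macOS", "Linux"]
BROWSERS = ["Chrome", "Firefox", "Safari"]
DATABASES = ["MySQL", "Oracle", "SQL Server"]


def supported(os_name: str, browser: str) -> bool:
    """Safari is only available on Apple platforms, so skip it elsewhere."""
    return browser != "Safari" or os_name == "macOS"


client_configs = [(o, b) for o, b in product(OPERATING_SYSTEMS, BROWSERS) if supported(o, b)]
print(len(client_configs), "client configurations:", client_configs)

# Assumption: each client configuration is exercised against each database.
full_matrix = [(o, b, db) for (o, b), db in product(client_configs, DATABASES)]
print(len(full_matrix), "configurations in total")
```

If 21 configurations is too many to run every cycle, a pairwise selection over the same lists is a common way to trim it.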

6. Reporting

The testing team will prepare and provide the following reports for the web application.

Test Cases Execution Report: Provides information on the execution of test cases, including:

- Pass/fail status

- Test case IDs

- Associated defects or issues encountered during testing

Defect Report: Contains details about the defects or issues discovered during testing, including:

- Description

- Severity

- Priority

- Steps to reproduce

- Current status (open, resolved, closed)
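Defect report entries and their statuses can be modelled as a small state machine. This sketch assumes a simple workflow built on the three statuses above: a fix moves a defect to resolved, and retesting either closes it or reopens it. The field names and the sample defect are illustrative; real defect tracking tools usually define richer workflows.

```python
from dataclasses import dataclass, field

# Assumed workflow: a fix moves a defect to "resolved"; retesting closes or reopens it.
TRANSITIONS = {
    "open": {"resolved"},
    "resolved": {"closed", "open"},
    "closed": set(),
}


@dataclass
class Defect:
    """One entry in a defect report (field names are illustrative)."""
    defect_id: str
    description: str
    severity: str
    priority: str
    steps_to_reproduce: list[str]
    status: str = "open"
    history: list[str] = field(default_factory=lambda: ["open"])

    def move_to(self, new_status: str) -> None:
        """Change status, rejecting moves the workflow does not allow."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"{self.defect_id}: cannot move from {self.status} to {new_status}")
        self.status = new_status
        self.history.append(new_status)


bug = Defect(
    "D-101",
    "Transfer form accepts a negative amount",
    severity="High",
    priority="P1",
    steps_to_reproduce=["Log in", "Open Transfers", "Enter -10", "Submit"],
)
bug.move_to("resolved")  # developer fix
bug.move_to("open")      # retest failed, defect reopened
bug.move_to("resolved")  # second fix
bug.move_to("closed")    # retest passed
print(bug.history)  # ['open', 'resolved', 'open', 'resolved', 'closed']
```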

Test Summary Report: Offers an overview of the testing activities conducted, including:

- Number of test cases executed

- Pass/fail rates

- Test coverage achieved

- Overall assessment of the application's quality

Test Progress Report: Tracks the progress of testing activities throughout the project, including:

- Planned versus actual test execution

- Remaining work

- Milestones achieved

- Risks or issues encountered

Test Coverage Report: Provides insights into the extent of testing coverage achieved, including:

- Areas of the application tested

- Types of testing performed

- Gaps or areas requiring additional testing
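Several of the figures in these reports are simple arithmetic over execution results. The sketch below assumes a hypothetical mapping from executed test case IDs to "pass" or "fail", plus the number of planned test cases. It derives the pass/fail rates of the summary report and the planned-versus-actual figures of the progress report.

```python
from collections import Counter


def summarise(results: dict[str, str], planned: int) -> dict:
    """results: executed test case ID -> 'pass' or 'fail' (hypothetical format).
    planned: number of test cases planned for this cycle."""
    executed = len(results)
    counts = Counter(results.values())

    def pct(part: int, whole: int) -> float:
        return round(100 * part / whole, 1) if whole else 0.0

    return {
        "executed": executed,
        "pass_rate_%": pct(counts["pass"], executed),     # summary report
        "fail_rate_%": pct(counts["fail"], executed),     # summary report
        "executed_of_planned_%": pct(executed, planned),  # progress report
        "remaining": planned - executed,                  # progress report
    }


results = {"TC-01": "pass", "TC-02": "pass", "TC-03": "fail", "TC-04": "pass"}
print(summarise(results, planned=5))
# {'executed': 4, 'pass_rate_%': 75.0, 'fail_rate_%': 25.0, 'executed_of_planned_%': 80.0, 'remaining': 1}
```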

Test Strategy vs Test Plan

| Comparison area | Test Strategy | Test Plan |
|---|---|---|
| Purpose | Provides a high-level approach, objectives, and scope of testing for a software project | Specifies detailed instructions, procedures, and specific tests to be conducted |
| Focus | Testing approach, test levels, types, and techniques | Detailed test objectives, test cases, test data, and expected results |
| Audience | Stakeholders, project managers, senior testing team members | Testing team members, test leads, testers, and stakeholders involved in testing |
| Scope | Entire testing effort across the project | Specific phase, feature, or component of the software |
| Level of detail | Less detailed and more abstract | Highly detailed, specifying test scenarios, cases, scripts, and data |
| Flexibility | Allows flexibility in accommodating changes in project requirements | Relatively rigid and less prone to changes during the testing phase |
| Longevity | Remains relatively stable throughout the project lifecycle | Evolves throughout the testing process, incorporating feedback and adjustments |

Conclusion

In software engineering, the test strategy document should be revisited from time to time during a release to keep testing heading in the right direction. When the release date is close, some of these activities are often skipped; before cutting any of them, discuss with the team whether dropping that activity will help the release without introducing significant risk.